Posted in 2021

About data on curvilinear or rotated regional grids

- 29 November 2021

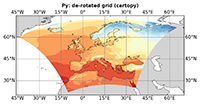

2D climate data can be sampled using different grid types and topologies, which can make a difference when it comes to data analysis and visualization. As the grid lines of regular or rectilinear grids are aligned with the axes of the geographical lat-lon coordinate system, these model grids are relatively easy to deal with. A common but more complex case is that of a curvilinear or a rotated (regional) grid. In this blog article we want to illuminate this case a bit: we describe how to identify a curvilinear grid, and we demonstrate how to visualize the data using the “normal” cylindrical equidistant map projection.

Data can be stored not only in different file formats (e.g. netCDF, GRIB), but also in different data structures. Besides the spatial dimensionality (e.g. 1D, 2D, 3D), we need to have a closer look at the grid and the topology used. As the time dependency of the data is encoded as the time dimension, a variable might be called a 3D variable although the spatial grid is only 2D.
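As a rough illustration of the distinction above, the following sketch guesses the grid type from the dimension names attached to the latitude and longitude coordinate variables. The function names `classify_grid` and `spatial_rank` and the dimension names used in the examples are hypothetical, and the heuristic is an assumption for illustration, not the method used in the article.

```python
from typing import Sequence


def classify_grid(lat_dims: Sequence[str], lon_dims: Sequence[str]) -> str:
    """Guess the grid type from the dimension names of the lat/lon variables.

    Heuristic (an assumption, not an authoritative rule):
    - lat and lon are 1D on different dimensions -> rectilinear
    - lat and lon are 1D on the same dimension   -> unstructured
    - lat and lon are 2D on the same dimensions  -> curvilinear
      (rotated grids usually carry such 2D lat/lon fields as well)
    """
    lat_dims, lon_dims = tuple(lat_dims), tuple(lon_dims)
    if len(lat_dims) == 1 and len(lon_dims) == 1:
        return "unstructured" if lat_dims == lon_dims else "rectilinear"
    if len(lat_dims) == 2 and lat_dims == lon_dims:
        return "curvilinear"
    return "unknown"


def spatial_rank(var_dims: Sequence[str], time_name: str = "time") -> int:
    """Count the dimensions of a variable that are not the time dimension."""
    return sum(1 for dim in var_dims if dim != time_name)


if __name__ == "__main__":
    print(classify_grid(("lat",), ("lon",)))
    print(classify_grid(("y", "x"), ("y", "x")))
    print(spatial_rank(("time", "y", "x")))
```

The second helper reflects the remark about time: a variable with dimensions `(time, y, x)` has three dimensions in total, but only two of them are spatial.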

DKRZ CDP Updates Nov 21

- 25 November 2021

including the new ICON-ESM-LR model primarily published at DKRZ.

A first ensemble set of simulations from the ESM ICON-ESM-LR for the DECK experiments is available including the experiments

How to install R packages in different locations?

- 25 October 2021

The default location for R packages is not writable, so you cannot install new packages there. On demand we install new packages system-wide and for all users. However, it is possible to install packages in locations other than root, and here are the steps:

create a directory in $HOME e.g. ~/R/libs

DKRZ CDP Updates July 21

- 02 July 2021

We proudly 🥳 announce that the CDP is extended by new sets of CMIP6 data primarily published at DKRZ. We also published new versions of corrected variables for the MPI-ESM1-2 Earth System Models.

The ensemble set of simulations from the ESM MPI-ESM1-2-HR for the dcppA-hindcast experiment is completed by another 5 realizations (8.5TB). In total, this set consists of about 10 realizations for 60 initialization years in the interval from 1960-2019 resulting in 595 realizations and 31 TB. For each realization, about 100 variables are available for a simulation time of about 10 years.

DKRZ CMIP Data Pool

- 23 June 2021

We proudly announce new publications of model simulations when we publish them at our DKRZ ESGF node. We also keep you updated about the status and the services around the CMIP Data Pool. Find extensive documentation under this link.

How to install jupyter kernel for Matlab

- 10 June 2021

In this tutorial, I will describe i) the steps to create a kernel for Matlab and ii) how to get the matlab_kernel working in Jupyterhub on Levante.

conda environment with python 3.9

How to re-enable the deprecated python kernels?

- 16 May 2021

Within the maintenance of Monday, May 15th, we will perform updates in our python installations (please see the details here).

Since the jupyterhub kernels are based on modules, the deprecated kernels will no longer be available as default kernels in jupyter notebooks/labs.

Requested MovieWriter (ffmpeg) not available

- 06 May 2021

conda env with ffmpeg and ipykernel
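The message in the title is the kind of error matplotlib's animation module reports when it cannot find the ffmpeg executable. As a minimal sketch, assuming the kernel's environment is expected to provide ffmpeg on `PATH`, you can check from inside the notebook whether the executable is visible. The helper name `find_ffmpeg` is hypothetical.

```python
import shutil
from typing import Optional


def find_ffmpeg(executable: str = "ffmpeg") -> Optional[str]:
    """Return the full path of the executable, or None if it is not on PATH."""
    return shutil.which(executable)


if __name__ == "__main__":
    path = find_ffmpeg()
    if path is None:
        # The kernel's environment does not expose ffmpeg.
        print("ffmpeg not found on PATH")
    else:
        print(f"ffmpeg found at {path}")
```

If this reports that ffmpeg is missing, the notebook is probably running in a kernel whose environment does not contain ffmpeg.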

How to containerize your jupyter kernel?

- 04 May 2021

Containers are not supported on Levante at this point.

We have seen in this blog post how to encapsulate a jupyter notebook (server) in a singularity container. In this tutorial, I am going to describe how you can run a jupyter kernel in a container and make it available in the jupyter*.

Webpack and Django

- 29 March 2021

I recently started to modernize the JavaScript part of a medium-sized Django site we run at DKRZ to manage our projects. We have used a version of this site since 2002, and the current Django implementation was initially developed in 2011.

Back then JavaScript was in the form of small scripts embedded into the Django templates. jQuery was used abundantly. All in all, JavaScript was handled very haphazardly because we wanted to get back to working with Python as soon as possible.

Create a kernel from your own Julia installation

- 23 March 2021

We already provide a kernel for Julia based on the module julia/1.7.0.

In order to use it, you only need to install IJulia:

Python environment locations

- 04 March 2021

Kernels are based on python environments created with conda, virtualenv or another package manager. In some cases, the size of the environment can grow tremendously depending on the installed packages.

The default location for python files is the $HOME directory. In this case, it will quickly fill your quota. In order to avoid this, we suggest that you create/store python files in other directories of the filesystem on Levante.
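To get a feeling for how much space an environment takes, the following sketch sums the file sizes below a directory. The function name `directory_size_bytes` is hypothetical, and the result is the sum of apparent file sizes, which may differ from what the quota accounting on the filesystem reports.

```python
import os
import sys


def directory_size_bytes(root: str) -> int:
    """Sum the sizes of all regular files below root, skipping symlinks."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # do not count link targets
            try:
                total += os.path.getsize(path)
            except OSError:
                pass  # file vanished or is unreadable; ignore it
    return total


if __name__ == "__main__":
    # sys.prefix points at the environment of the running interpreter.
    size_gb = directory_size_bytes(sys.prefix) / 1e9
    print(f"{sys.prefix}: {size_gb:.2f} GB")
```

Running it inside a kernel reports the size of the environment that kernel is based on.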

The following are two alternative locations where you can create your Python environment: